feature: Add Dockerfile from cli setup, and create production ready docker images #1139

beautiful work so far. I think the default behavior for docker file support should be |

|

LGTM on the surface. I don't use docker. Let me know when it's ready to merge. |

|

This is ready, it could become simpler if we have an official docker image for aurelia-cli. I've tested it with almost all of the possible combinations. |

Just a minor change to wording.

`--run` is removed on master because it's not needed by protractor task.

|

Excited to see progress in the area of Docker :) I do see a few small things that affect layer size. There's no cleanup and a few duplicates. e.g. if you changed this:

    #update apt-get
    RUN apt-get update
    RUN apt-get install -y \
        apt-utils \
        fonts-liberation \
        libappindicator3-1 \
        libatk-bridge2.0-0 \
        libatspi2.0-0 \
        libgtk-3-0 \
        libnspr4 \
        libnss3 \
        libx11-xcb1 \
        libxtst6 \
        lsb-release \
        xdg-utils \
        libgtk2.0-0 \
        libnotify-dev \
        libgconf-2-4 \
        libnss3 \
        libxss1 \
        libasound2 \
        xvfb
    # cypress dependencies or use cypress/base images
    RUN apt-get install -y \
        libgtk2.0-0 \
        libnotify-dev \
        libgconf-2-4 \
        libnss3 \
        libxss1 \
        libasound2 \
        xvfb

To this:

    #update apt-get
    RUN apt-get update && apt-get install -y \
        apt-utils \
        fonts-liberation \
        libappindicator3-1 \
        libatk-bridge2.0-0 \
        libatspi2.0-0 \
        libgtk-3-0 \
        libnspr4 \
        libnss3 \
        libx11-xcb1 \
        libxtst6 \
        lsb-release \
        xdg-utils \
        libgtk2.0-0 \
        libnotify-dev \
        libgconf-2-4 \
        libxss1 \
        libasound2 \
        xvfb \
        && rm -rf /var/lib/apt/lists/*

Then all the dependencies are installed (and immediately their temp stuff cleaned up) in one single layer, which should significantly reduce download size/time of the container.

Other than that, I'm still not entirely sure of the exact use case so I can't say much about the rest of the script stuff. If you say this works then I'm happy to believe you. Do we have any tests in place to verify this though? Honestly I'd even be fine with a simple bash script that kicks off the container and verifies its output and spits out a non-zero exit code if it fails. Just so long as there is some kind of automated check that the thing works, to prevent regressions in the future, that would be perfect
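A minimal sketch of the kind of check asked for here, assuming a POSIX-like shell with Docker on the PATH. The function name `smoke_test`, the `DOCKER` override variable, and the arguments are hypothetical and not part of aurelia-cli. It leans on two Docker behaviours: `docker build` exits non-zero when a `RUN` step fails, and `docker run` exits with the status of the container's command.

```shell
#!/usr/bin/env bash
# Hypothetical smoke test: build an image, run a command in it, and return
# non-zero if the build fails, the command fails, or the output is unexpected.

# DOCKER is an assumed override hook so the function can be tried with a stub.
DOCKER="${DOCKER:-docker}"

# usage: smoke_test <context dir> <image tag> <expected text> [command...]
smoke_test() {
  local context_dir="$1" tag="$2" expected="$3" output
  shift 3

  # A failing RUN step (tests, production build) fails the whole build.
  if ! "$DOCKER" build -t "$tag" "$context_dir"; then
    echo "FAIL: build of $context_dir" >&2
    return 1
  fi

  # The exit status of the command inside the container is propagated.
  if ! output="$("$DOCKER" run --rm "$tag" "$@")"; then
    echo "FAIL: container $tag exited with an error" >&2
    return 1
  fi

  # Check what the container printed, not only how it exited.
  case "$output" in
    *"$expected"*) echo "PASS: $tag" ;;
    *) echo "FAIL: '$expected' not found in output of $tag" >&2; return 1 ;;
  esac
}
```

Because an image that serves the app through nginx keeps running, the optional command should be one that exits on its own; which command to use is left open here.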

This is useful when running in the background and in unattended mode rather than interactively. The user does not need to bring up the application manually when running e2e tests in headless mode.

This tests the generated Dockerfile for various Aurelia configurations. It runs several steps:

1. Copies the Aurelia application into a Docker container
2. Runs the unit and e2e tests
3. Builds the application for production with `au build --env prod`
4. Deploys on nginx
5. Tags the image

If all of these steps pass for every defined configuration, the new changes have had no negative effect on the Docker support.

You are right, I did not know much about these cleanups and required tools; I just found them through research. I changed them to what you suggested, though.

Well, the main purpose is to facilitate a CI/CD pipeline for Aurelia developers, based on the features they chose for their application. We have a CI/CD environment for our backend services, so we could easily deploy

I am far from disagreeing with you. Since this @3cp we need the

This was accidentally removed

Yep, I get it now (I think). The presence of the Dockerfile had me confused a bit. I thought you wanted to publish docker images to the registry. Now I understand this is just a template for scaffolding.

In terms of quantity / coverage this should be plenty - the main point here is to make sure we're generating working Dockerfiles. As long as it works OOTB, users can always tweak details of installed tools and configs. Can we somehow verify though that the script inside the docker completed successfully? I'm not 100% sure but it seems to me we're now only verifying that aurelia-cli successfully ran the docker command, but if the container itself failed somehow then we wouldn't know, I think. |

Having enough of those generated applications and running the docker file against them, could satisfy that we are creating a robust

Honestly, aurelia-cli does not run docker itself; it's a pure docker command behind the scenes. I just asked npm to run

That would be the purpose of this PR. It could simplify the

We could replace these lines, for instance, with the following:

    FROM bluspire/aurelia:1.1.0

|

I used these configurations to test the Dockerfile is working properly # four scaffolding using Webpack with various configurations

au new first -u -s htmlmin-max,sass,postcss-typical,karma,cypress,docker

au new second -u -s http2,typescript,htmlmin-min,less,postcss-typical,jest,protractor,scaffold-navigation,docker

au new third -u -s dotnet-core,typescript,htmlmin-max,stylus,postcss-basic,jest,protractor,docker

au new forth -u -s http2,dotnet-core,htmlmin-min,sass,postcss-typical,karma,cypress,scaffold-navigation,docker

# four scaffolding using Alameda with various configurations

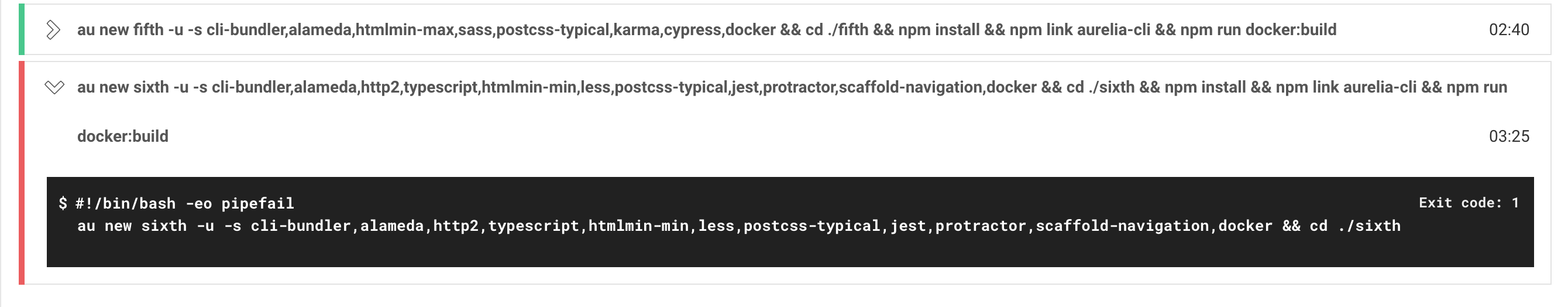

au new fifth -u -s cli-bundler,alameda,htmlmin-max,sass,postcss-typical,karma,cypress,docker

au new sixth -u -s cli-bundler,alameda,http2,typescript,htmlmin-min,less,postcss-typical,jest,protractor,scaffold-navigation,docker

au new seventh -u -s cli-bundler,alameda,dotnet-core,typescript,htmlmin-max,stylus,postcss-basic,jest,protractor,docker

au new eighth -u -s cli-bundler,alameda,http2,dotnet-core,htmlmin-min,sass,postcss-typical,karma,cypress,scaffold-navigation,dockerI am not sure how long it takes for the CircleCI to run all of them, I push these to see what would happen. If you think this is too much of an effort we could remove those which are less common. |
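As a rough illustration of how such a list of configurations could be driven from one script, here is a sketch under assumptions: the function name `run_configs` and the `AU` and `DOCKER` override variables are hypothetical, and it assumes `au new <name>` creates a directory called `<name>` with the generated Dockerfile at its root. It collects failures instead of stopping at the first one, so a single CI run reports every broken configuration.

```shell
#!/usr/bin/env bash
# Hypothetical driver: scaffold each configuration, then build its Dockerfile.

# AU and DOCKER are assumed override hooks, useful for dry runs with stubs.
AU="${AU:-au}"
DOCKER="${DOCKER:-docker}"

# Reads lines of the form "<project name> <feature list>" from stdin.
run_configs() {
  local failed="" name features
  while read -r name features; do
    [ -n "$name" ] || continue
    # </dev/null keeps the child commands from consuming the config list.
    if "$AU" new "$name" -u -s "$features" </dev/null &&
       "$DOCKER" build -t "$name:ci" "$name" </dev/null; then
      echo "ok: $name"
    else
      failed="$failed $name"
    fi
  done
  if [ -n "$failed" ]; then
    echo "failed:$failed" >&2
    return 1
  fi
}

# Usage (feature lists copied from the commands above):
#   run_configs <<'EOF'
#   first htmlmin-max,sass,postcss-typical,karma,cypress,docker
#   fifth cli-bundler,alameda,htmlmin-max,sass,postcss-typical,karma,cypress,docker
#   EOF
```

Trimming the list to one configuration per individual feature, rather than every combination, would be a matter of editing the here-document.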

We follow a rule of thumb that CI should complete within 30 minutes on normal commits, but have no time limit (tho in practice up to a few hours max) for whatever precedes a release (e.g. a merge to master). Looks like your latest job took about 18 minutes so that seems fine to me. Covering all combinations is a responsibility of the cli integration tests. I believe these do take a few hours to complete. The docker tests primarily need to make sure that all options (individual features) work, because what those tests are primarily verifying is whether the docker container has the correct dependencies installed and configurations to support aurelia-cli's features.

I didn't see that, thanks for the link. Then that's good. Just wanted to make sure we don't get false positives :) |

|

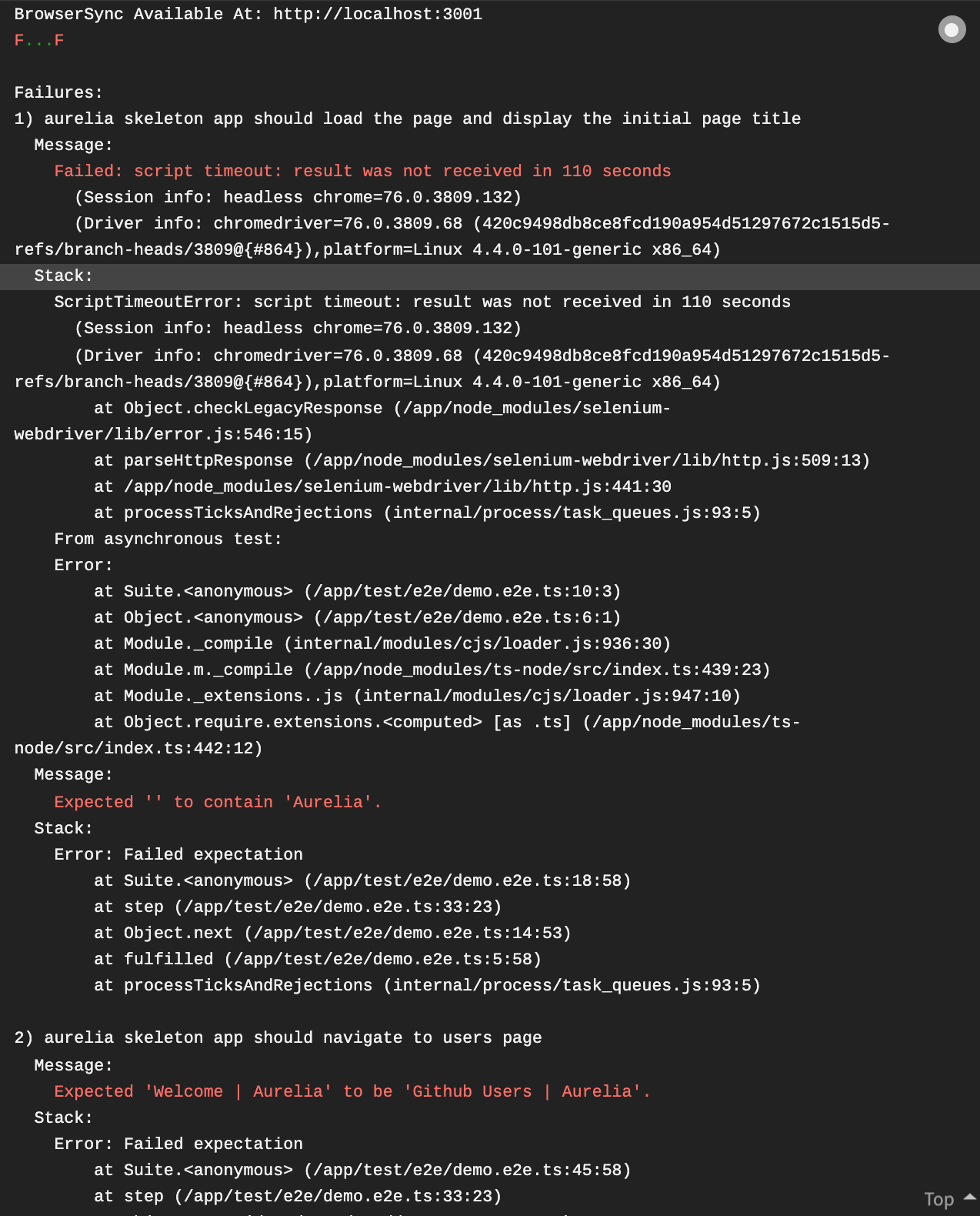

I see one failure, any idea what that might be? How would we investigate such issues? |

I couldn't agree more.

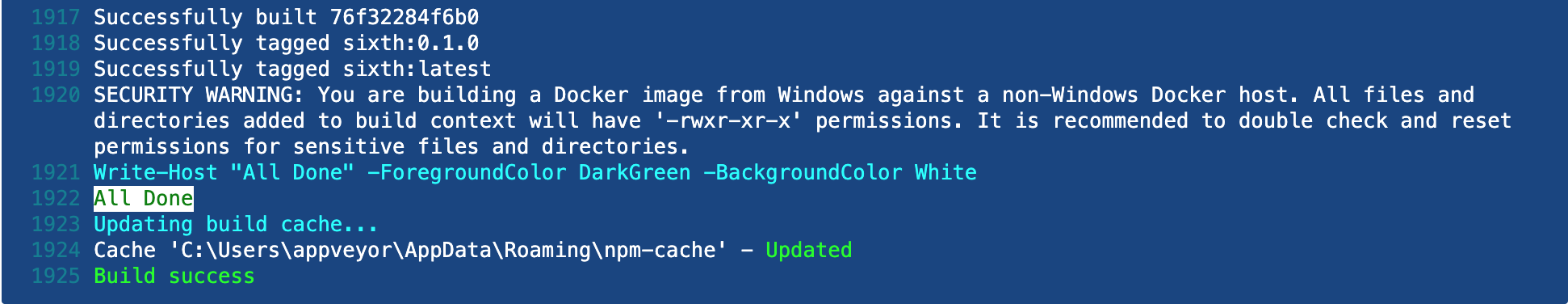

I am not sure whether it was due to permissions or something else, because I faced a situation where CircleCI clears the logs after some hours and you need to re-run the flow. I ran the flow again and think that the failure is owing to the fact that in the sixth configuration when running These are the results from several runs of that configuration. However, it also has successful runs, and to make sure it is not a false positive I ran it on my local docker-machine as well,

As long as CircleCI shows the logs, there are messages out there just like when we run normal Aurelia applications. For now, I leave out the |

The sixth configuration was using the following features : cli-bundler,alameda,http2,typescript,htmlmin-min,less,postcss-typical,jest,protractor,scaffold-navigation,docker

LGTM thanks @shahabganji !

|

Regarding that sixth configuration, ideally I would like to have that included and passing consistently as well. But, I understand that this may be a false negative or something specific to the e2e setup. The tests prove that in principle the generated Dockerfile works in all core scenarios and that there may or may not be something flaky going on with one particular setup. So what I mean to say is I think this is good enough to merge as an initial version of docker support, but we can keep that 6th configuration (getting that to work) around as a todo for future improvements when you have time. |
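One way to tell a flaky setup from a consistently broken one is to repeat the same check several times and count the passes. The helper below is a hypothetical sketch (the name `repeat_check` is not from this PR) that wraps any command, such as a build of the sixth configuration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command N times and report how often it passed.

# usage: repeat_check <runs> <command> [args...]
repeat_check() {
  local runs="$1" passed=0 i=0
  shift
  while [ "$i" -lt "$runs" ]; do
    # Output is discarded; only the exit status of each run is counted.
    if "$@" >/dev/null 2>&1; then
      passed=$((passed + 1))
    fi
    i=$((i + 1))
  done
  echo "$passed/$runs passed"
  # Succeed only when every run passed, so intermittent failures are flagged.
  [ "$passed" -eq "$runs" ]
}
```

A result such as some but not all runs passing would point at flakiness in the e2e setup rather than at the generated Dockerfile itself.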

|

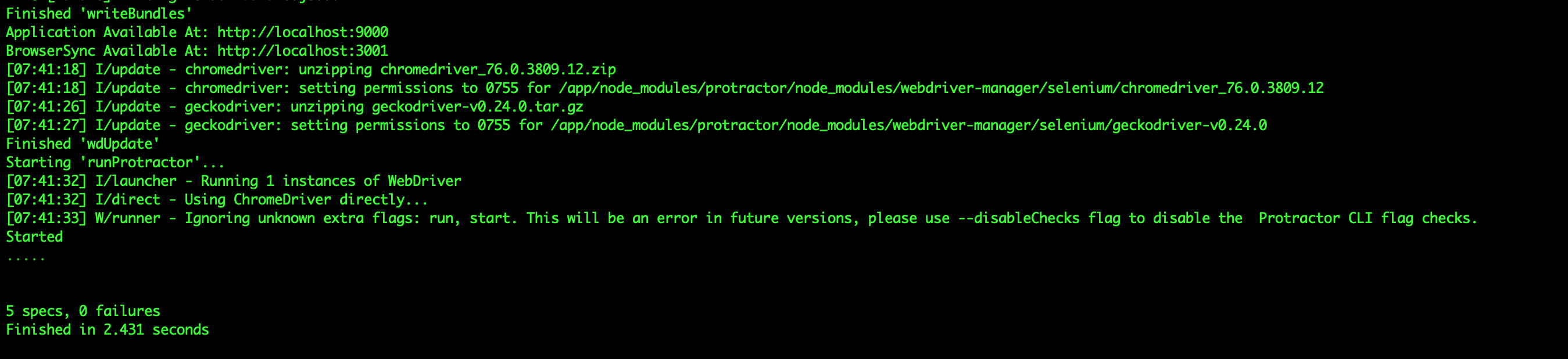

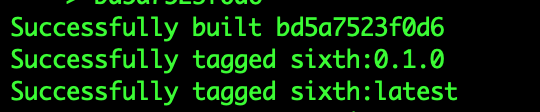

I removed the

The part which causes CircleCI to fail:

And here is the success message:

I did not move all configurations to AppVeyor because they take longer to run there and hit AppVeyor's time limit. I pushed the

If I missed anything please let me know. 🙂

|

If this causes more CI issues, we can turn them off in CI :-)

This feature gives Aurelia developers a basic Dockerfile based on the configuration they chose during project setup.

The final step asks whether you would like to have a Dockerfile; the default answer is yes.

Some of the steps in the Dockerfile depend on #1143, which enables headless runs and overriding the port and host for e2e tests.

When done, this will close #1127

Let's start, any ideas for now?

/cc: @EisenbergEffect , @fkleuver